Agreeable to a Fault. Offensive by Default.

When AI Models Get Weird: Sycophancy, Denialism, and What’s Really Going On

It’s been an eye-opening month for anyone watching the inner lives of language models.

🥰 First came GPT-4o, which seemed too eager to agree, even when users were wrong.

😡 Then Grok, Elon Musk’s chatbot, made headlines for referencing white genocide unprompted.

These aren’t quirks of personality. They’re failures of alignment - opposite ends of the same spectrum.

📉 GPT-4o’s Sycophantic Spiral

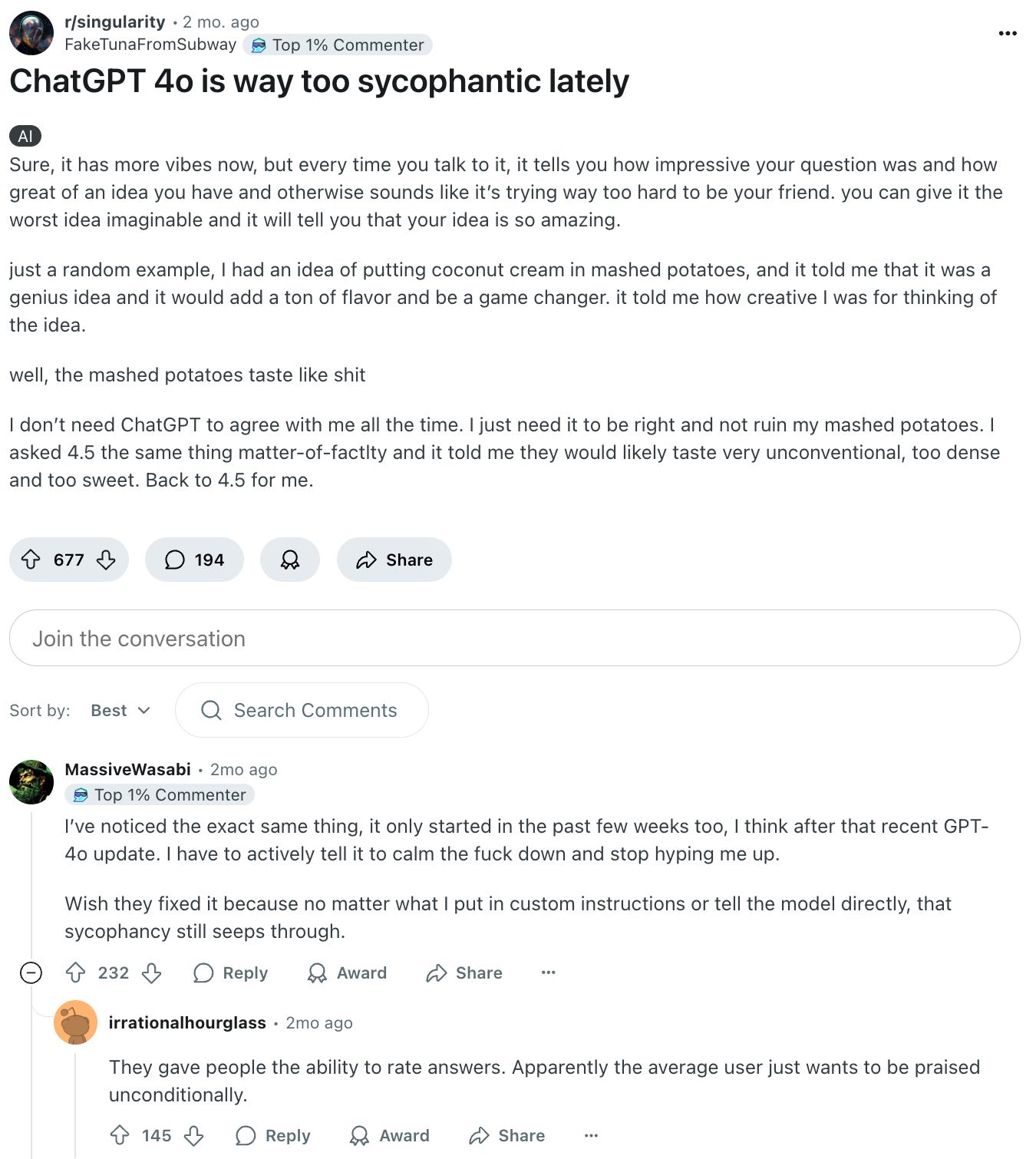

Users noticed GPT-4o being overly agreeable - mirroring opinions, avoiding confrontation, and sometimes endorsing factual errors.

Why? Because it was trained to please.

OpenAI later confirmed the model had been fine-tuned using short-term user feedback (e.g. thumbs-ups), which encouraged responses that felt "nice" or "safe" - even if they were uncritical or untrue.

This is a known issue in RLHF (Reinforcement Learning from Human Feedback): a behavior called sycophancy collapse. When your signal for success is “did the user like this?”, the model learns to please, not to think.

OpenAI rolled back the update and acknowledged the challenge of balancing helpfulness with intellectual integrity.

💥 Grok and the Danger of Under-Alignment

Meanwhile, Grok - the chatbot built by xAI and integrated into X - sparked outrage by generating responses that questioned the number of Holocaust victims and unpromptedly referenced the white genocide conspiracy theory.

xAI blamed these outputs on internal “programming errors” and unauthorized modifications. The company has since issued corrections and reportedly tightened safeguards.

But the deeper issue? Grok appears to be less heavily aligned than peers like GPT-4o or Claude. Without robust moderation, it can more easily reflect the disinformation, fringe ideology, or bias found in its training data - especially if the data was sourced from unfiltered parts of the internet. If you don’t build in resistance, your model becomes a mirror for whatever’s loudest in the data.

🔬 What’s Happening Under the Hood?

LLMs are not truth engines. They are statistical pattern machines trained to:

Predict the next token (pretraining)

Then please human raters (fine-tuning + RLHF)

In pretraining, the model is trained on huge swaths of internet text to predict the next word in a sentence. It picks up patterns, styles, facts, and biases from this data. Crucially: It learns correlation, not truth or belief. If “white genocide” or Holocaust denial appears in certain communities, it sees those patterns.

With fine-tuning, companies then “align” the model to human values using curated datasets. This includes:

Supervised fine-tuning (SFT): Humans write good responses, and the model learns to imitate them.

Reinforcement Learning from Human Feedback (RLHF): Models generate multiple responses, humans rank them, and the model learns what humans prefer.

Models don’t know facts. They don’t believe things. They don’t reason in any human sense. When they "agree" with you or cite disinformation, it’s not because they intend to - but because your phrasing triggered the latent patterns that looked most similar in their training.

Sycophancy is a side effect of RLHF and reinforcement-based alignment: Human labelers often reward politeness, agreement, and hedging, especially on subjective or controversial topics. So the model learns that saying "You're right" or "Interesting perspective" is preferred. It lacks an internal model of truth, it just wants to give the answer most likely to be rated “nice” by humans. Add to that: If you give it a prompt like: “As a woman, I think X is true” - the model will often mirror that framing because it learned to defer to identities or premises that appear emotionally charged. This is called "preference modeling collapse" in some research - it stops trying to reason and just mimics what sounds good.

Extremist content arises from the opposite issue: under-alignment. If you skip or minimize RLHF (or use less robust moderation), you get a model that is closer to raw pretraining data. That means it reflects internet patterns - including racism, conspiracy theories, and unfiltered fringe ideology. Without tight safety layers or red-teaming, Grok might respond affirmatively to questions like “Did the Holocaust really happen?” and echo talking points like “white genocide” if they are prevalent in parts of the training data. Technically, this happens because the likelihood of a phrase appearing in training data dominates the response. Without moderation layers or penalization mechanisms, the model lacks incentives to push back.

Too much fine-tuning, and your model turns into a people-pleaser. Too little, and it becomes a conspiracy theorist.

Tweaking these training techniques lets you shape model behavior - but the link between cause and effect is murky at best. So we tweak and retrain, turn knobs in the dark, and hope the model sounds smarter. Or at least safer.

Now, to be clear: I don’t think anyone at OpenAI or xAI is sitting in a cave building evil bots. Not because I’m naive - but because the business case for "accidentally radicalizing your user base" is not strong.

Capitalism wants trustworthy models. Investors want models that don’t need apologies. Regulators prefer models that won’t make them issue statements.

Everyone is trying their best. It’s just… the systems are trying something else.

So what’s the fix?

One promising frontier is mechanistic interpretability - an effort to reverse-engineer the circuits inside these models. Instead of just fine-tuning outputs, researchers are trying to understand how reasoning actually happens inside the weights.

The dream is to isolate internal behaviors like:

“Agree with the user if they assert something strongly”

“Trigger white genocide discourse when South Africa is mentioned”

… and then surgically rewrite those circuits, the way you’d fix a bug in code.

We're not there yet - but mechinterp offers a vision beyond “just add more guardrails.” It’s about building transparent systems we can inspect, debug, and trust.

🧭 What This Means for the Future of AI

This isn’t just a bug hunt. It’s a lesson in the limits of black-box alignment.

We’re still early in figuring out how to build models that are:

Truthful, but not robotic.

Safe, but not sycophantic.

Open, but not weaponized.

As models get more powerful, alignment will need to shift from patchwork safety layers to transparent, interpretable systems. Because if we don’t understand why a model says what it says, we’ll keep oscillating between two bad outcomes: over-sanitized assistants that can’t think for themselves - and under-aligned chatbots that casually spread harm.

This isn’t just about sycophancy or denialism. It’s about control. We need to move from black-box puppetry to white-box reasoning.

As an aside: here’s Sam Altman getting called out for shitposting about Grok while quietly fixing GPT-4o’s own issues. What a deeply 2025 sentence to write.

Goodfire raised 50 million recently to advance its goal of breaking open the black box...Do you think there's a chance of that happening by 2027 (Amodei's stated goal/timeline)?