East of AGI: The Future of Open-Source AI Is Made in China

Kimi, Qwen, and the rise of a parallel AI stack the West can’t afford to ignore.

This month, China shipped the best open-source LLM ever released. Twice.

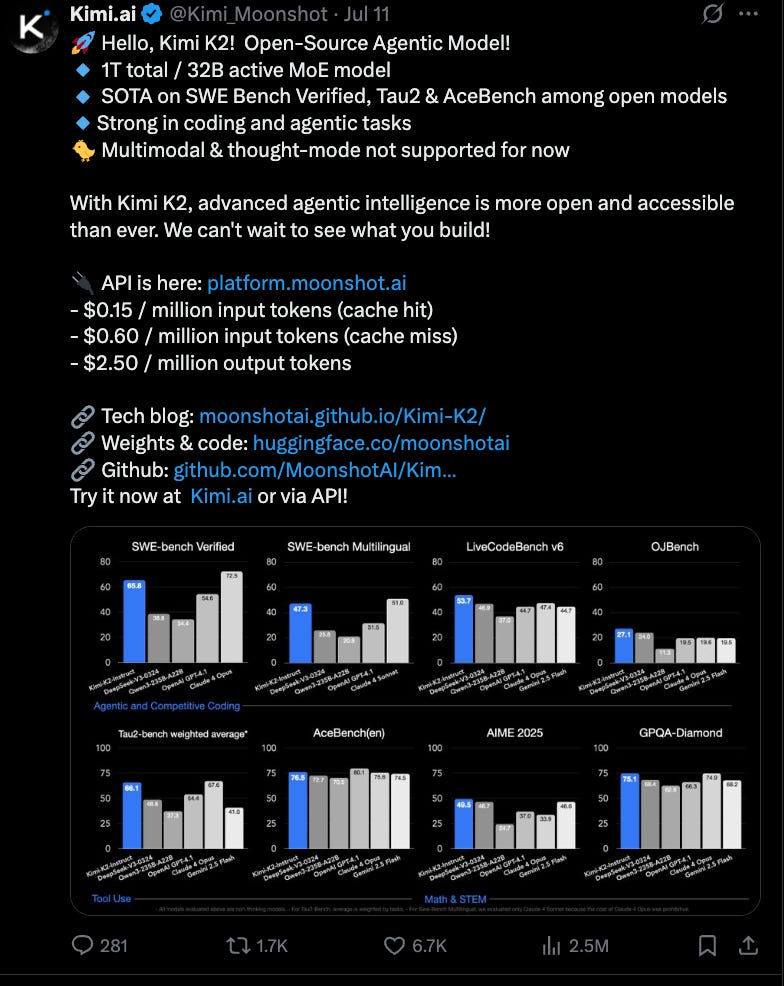

First came Moonshot’s Kimi K2 - a Mixture-of-Experts model with roughly 1 trillion total parameters (about 32B active per token) and up to 2 million tokens of context in proprietary deployments (128K in the open release), trained from scratch and fine-tuned for long-document reasoning. It shot to the top of Hugging Face’s leaderboard, outperforming Meta’s Llama 3 70B and xAI’s Grok-1.

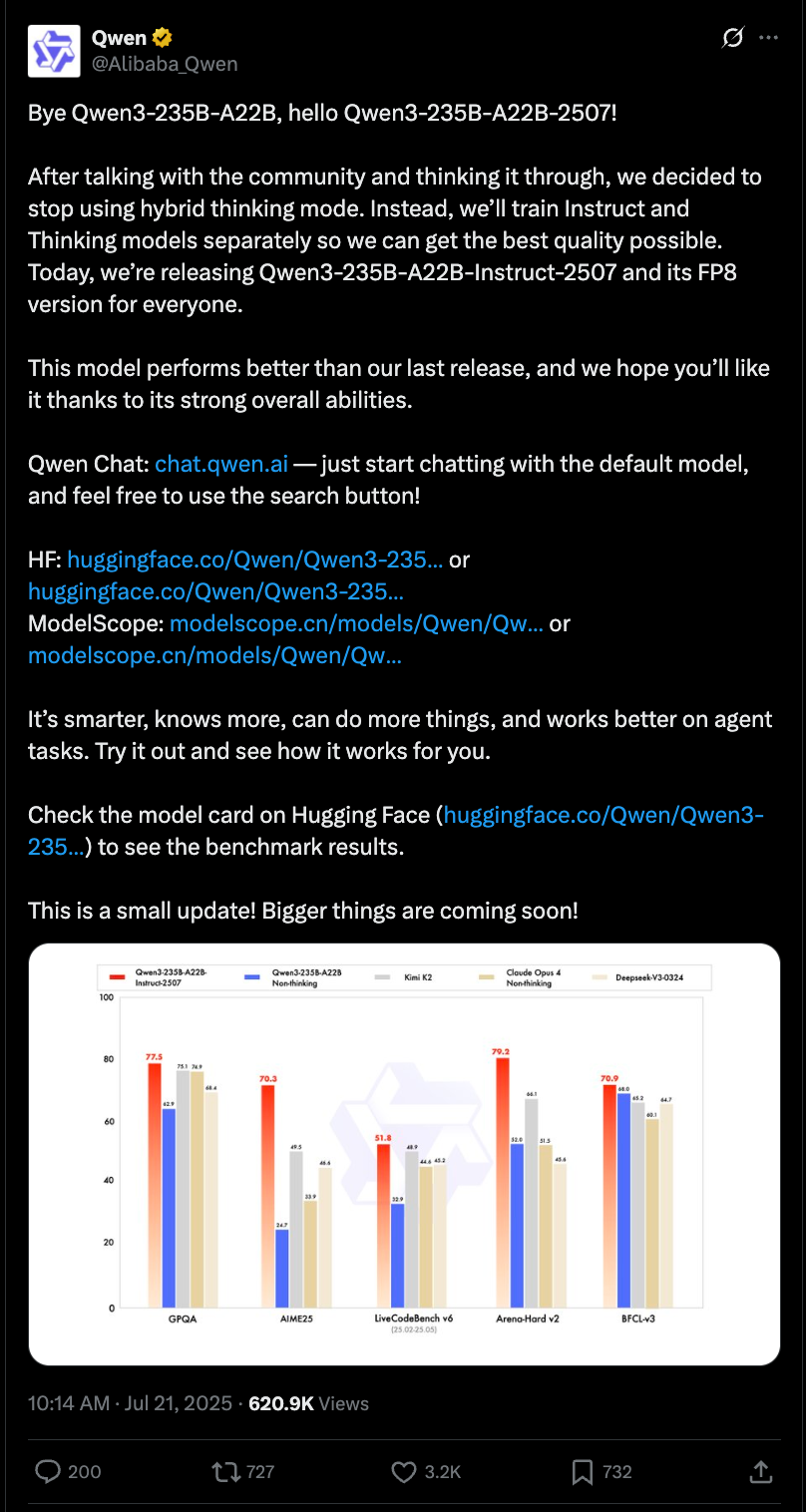

Then, just two weeks later, Alibaba’s Qwen3-235B-A22B-2507 dropped. It is a Mixture-of-Experts model with 235B total parameters and about 22B active per token, released under Apache 2.0 - open weights, tokenizer, the works - and now the highest-performing open-weight LLM to date, beating all others across MMLU, GSM8K, HumanEval, and ARC. Despite being roughly one-fourth the size of Kimi K2, it proved faster and smarter.
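For readers wondering what the "235B-A22B" in the model name means, here is a minimal sketch that reads it as total and active parameter counts in billions. The helper name `parse_moe_name` is hypothetical, and the `<total>B-A<active>B` pattern is an assumed reading of this naming style, not an official parser.

```python
import re


def parse_moe_name(name: str) -> tuple[float, float]:
    """Return (total_billions, active_billions) from a '<N>B-A<M>B' style name.

    Assumption: the first number is the total parameter count and the
    'A'-prefixed number is the count activated per token.
    """
    match = re.search(r"(\d+(?:\.\d+)?)B-A(\d+(?:\.\d+)?)B", name)
    if match is None:
        raise ValueError(f"no '<total>B-A<active>B' pattern in {name!r}")
    return float(match.group(1)), float(match.group(2))


total_b, active_b = parse_moe_name("Qwen3-235B-A22B-2507")
active_share = active_b / total_b  # fraction of parameters used per token
print(f"{active_b:.0f}B of {total_b:.0f}B parameters active per token ({active_share:.1%})")
```

Under that reading, fewer than one in ten parameters does work on any given token, which is one reason a model this large can still be comparatively cheap to run.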

These aren’t just strong Chinese models. They’re better than everything else that’s open.

If you're only tracking OpenAI, xAI, DeepMind, Anthropic, and Meta (OXDAM, anyone?), you're missing half the map. Let’s talk about how China’s AI strategy is diverging from the U.S.:

(1) Different Foundations. Chinese labs aren’t just fine-tuning Western models - they’re building from scratch. Kimi and Qwen weren’t bootstrapped from GPT-2 or pre-trained in English. They’re native-born models, optimized for:

- Chinese-language tasks
- Long-context reasoning
- Mobile-first deployment

Moonshot’s Kimi is built for cognitive labor, not chatroom banter. It’s a document-native agent - compressing legal contracts, summarizing financial reports, answering across sprawling PDFs. Think less "conversational AI," more "AI that reads 10,000 pages so you don’t have to."

(2) Different Form Factors. The Western paradigm centers on chat-first UX: Copilot, Claude, ChatGPT. In China, LLMs live inside superapps: WeChat, Taobao, DingTalk. The interface is less visible, more embedded - generating invoices, rewriting legal terms, creating marketing copy inside workflows. The user doesn’t always know they’re using an LLM - and they don’t care. The value is functional, not philosophical.

(3) Different Constraints.

U.S. labs benefit from:

- Best-in-class GPUs (A100/H100)
- Global API distribution
- English-language web data
- Loose alignment requirements

Chinese labs face:

- U.S. export restrictions on advanced chips
- No access to OpenAI, Anthropic, or Gemini APIs
- Stricter regulatory oversight on outputs

But constraints breed innovation. Chinese models are built to be efficient, deployable, and sovereign. And they iterate fast, often weekly.

(4) Different Strategic Advantages.

China has:

- Data access: Massive consumer internet footprint + government records = rich pretraining sources.
- State support: Government subsidies for compute, training, and foundation model development.
- Enterprise pull: Urgent demand for AI across logistics, finance, manufacturing - sectors where LLMs aren’t toys, but tools.
- Centralized velocity: Close coordination between state, academia, and private labs accelerates deployment.

The Ministry of Industry and Information Technology (MIIT) has already registered 40+ foundation models for public use - creating a semi-regulated AI stack that scales.

The strategy is simple: deploy vertically, tune for domestic use cases, build sovereign infrastructure, and win adoption, not acclaim. And the results are showing.

Moonshot AI, the team behind Kimi, was founded in 2023 and is backed by HongShan (formerly Sequoia China). They’ve reportedly surpassed 10M users and are raising at a $3B+ valuation.

Alibaba DAMO Academy, behind the Qwen series, is building a full-stack LLM ecosystem. Qwen2-72B outperforms all open dense models today, and it’s fully Apache 2.0 licensed. This is not just a research flex - it’s a productized core for Alibaba Cloud, Taobao, and enterprise AI offerings.

01.AI, Zhipu, Baichuan, and MiniMax are all racing forward with distinct approaches to model scaling and agents. While the West continues to chase AGI, China is deploying AI that works - at scale, for billions, inside the operating systems of everyday life. We’d be foolish to ignore it.

You’re one of the very few that talk about China. Thank you!

I’d add to your analysis: China has (or will have) cheap access to energy. It’s often overlooked, but AI is extremely energy-hungry, and only economies with cheap energy will be able to leverage AI to its fullest potential. The US has a lot of energy available but little or no new exploration, with shale production plateauing; it is also slow to adopt nuclear, where China is way ahead. Economic growth requires cheap energy.