For the past 18 months, Google has been the punchline in the AI narrative it helped invent.

OpenAI captured consumer mindshare. Microsoft locked in enterprise deals.

Google? It shipped some forgettable Bard variants and got memed into irrelevance as the genius who couldn’t execute.

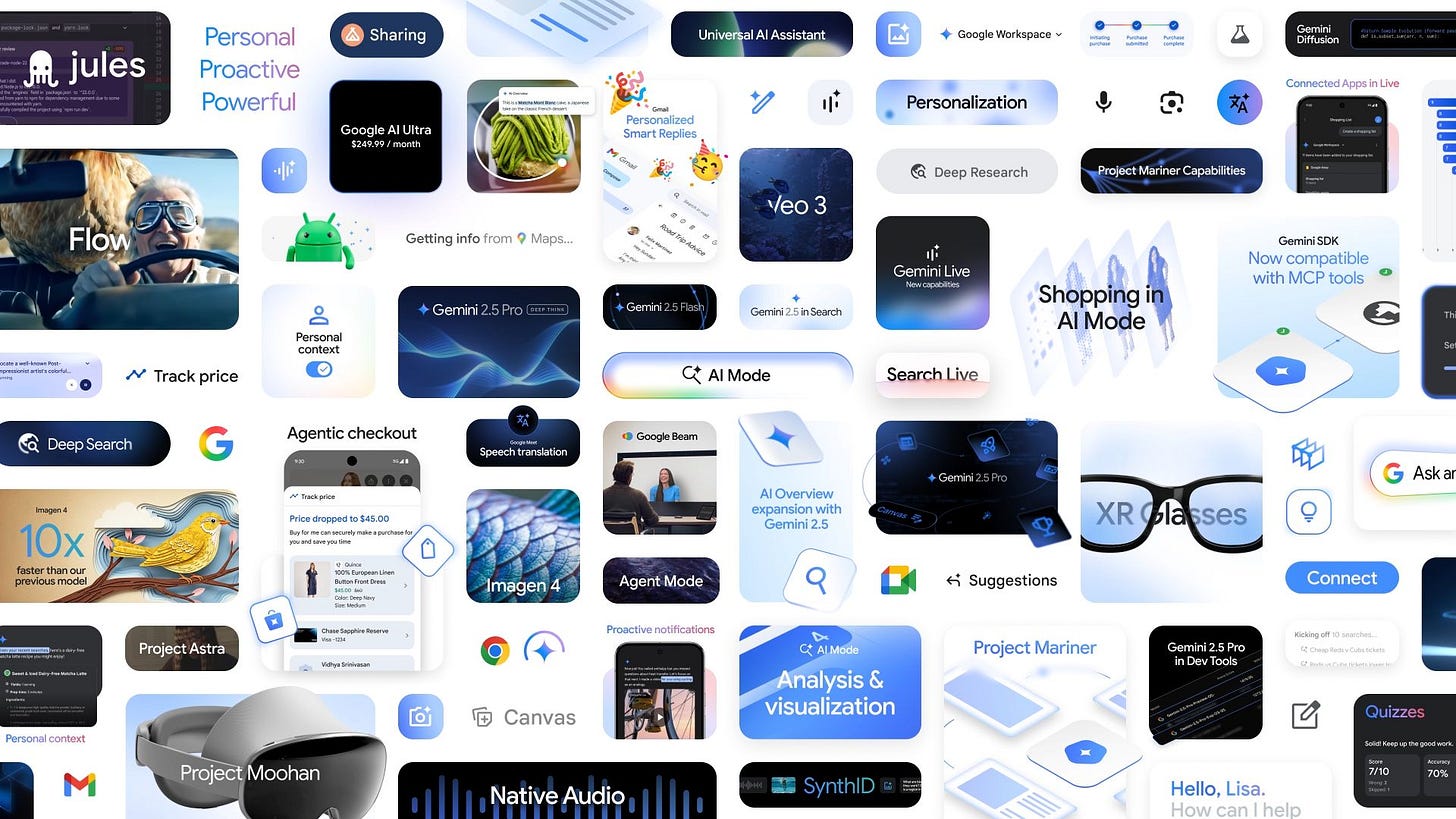

That started to shift earlier this year. But I/O 2025 was Google’s first full-scale counterattack - and it was overwhelming in both scope and intent. Across every product line, from Search to Android to YouTube to Gmail, Gemini didn’t just show up. It showed up everywhere.

So much mind-bending stuff was announced that it’s hard to even know where to begin - which, in itself, is the headline. They’ve thrown the kitchen sink at their haters. Let’s dig in.

1. Search, Rewritten from the Inside Out

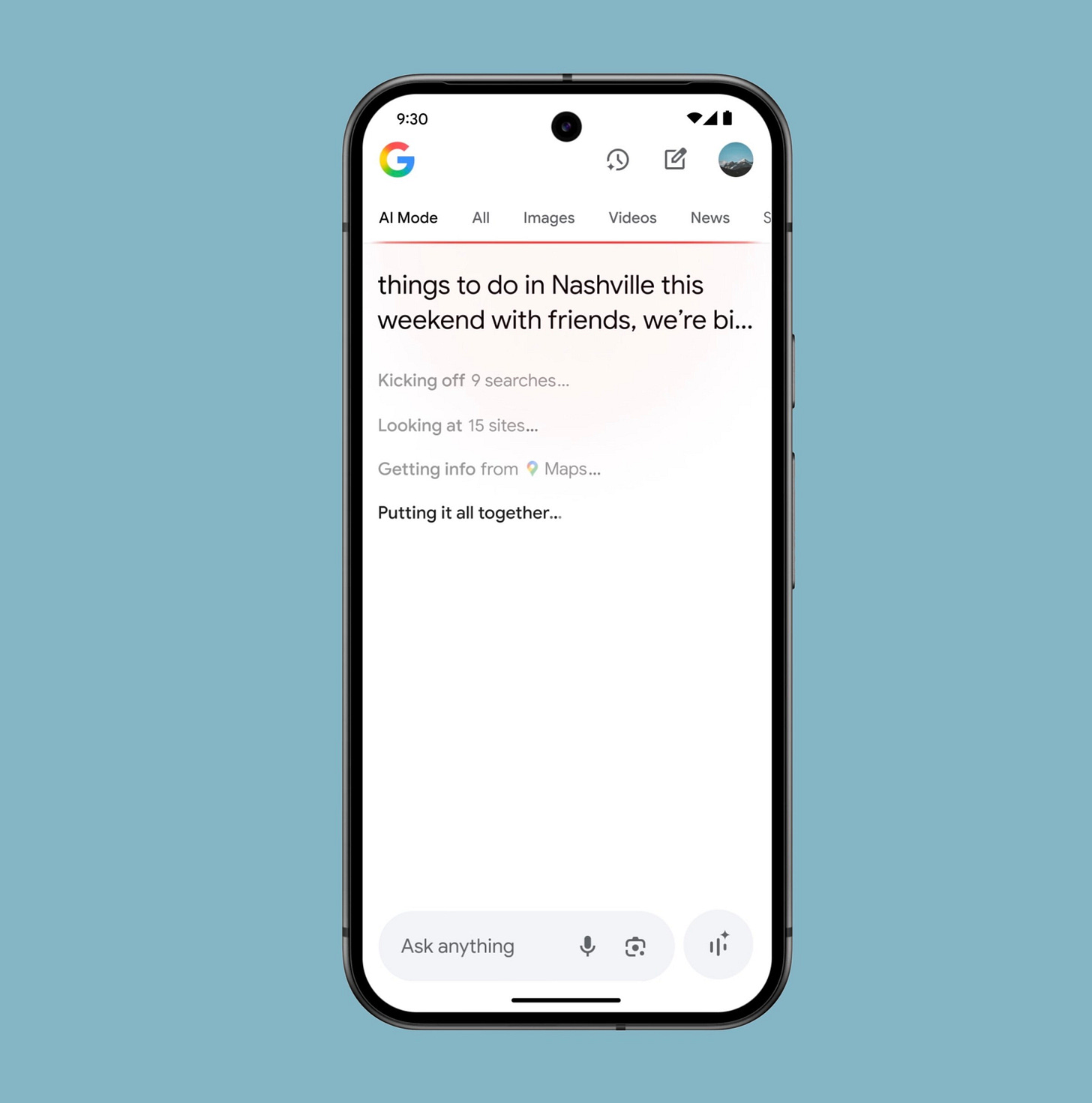

The most disruptive product at I/O wasn’t a model. It was AI Mode - Google’s quiet demolition of its most profitable interface: the search results page.

AI Mode replaces the familiar sea of blue links with a Gemini-powered conversational interface. Ask a question, follow up, refine your query, branch off, take action - all without ever leaving the page. It doesn’t just find sources. It summarizes the internet, tracks your intent, and guides you through a session. It’s not “search” anymore. It’s co-navigation.

Google is blowing up its own business model before anyone else can. The company cracked the glass on its oldest interface and said the quiet part out loud:

“The search results page was a construct.”

The future isn’t ten blue links. It’s a synthesized, contextual, task-completing dialogue. A full-stack redefinition of how people access - and increasingly, skip - the web.

For two decades, search monetization was built on sponsored links and click-throughs. AI Mode sidelines both. Users stay on-page. Answers are synthesized, not sourced. The open web becomes a backend.

The entire search economy - SEO, publishing, affiliate marketing, even web architecture - is now in the blast radius.

Google isn’t waiting to see how it plays out - AI Overviews already reach 1.5B+ users a month. AI Mode is now rolling out across the U.S.

AI Mode also introduces two new entry points:

Project Mariner: a web agent that completes tasks for you

Search Live: a real-time camera-based search you interact with by showing, not typing

The real innovation isn’t technical. It’s cultural. Google is doing what few incumbents ever dare: risking its core business model to meet the moment.

2. Great Gets Better

Lost in the I/O avalanche was another gem: Google took Gemini 2.5 Pro, by many measures already the best model on the market, and made it significantly better.

Enter Deep Think: a new mode that simulates multiple lines of reasoning before committing to an answer. It’s Google’s clearest answer yet to hallucination, brittle logic, and the “one shot, hope it works” dynamic of most LLMs.

Built on Google's research in parallel thinking techniques, Deep Think lets the model explore multiple hypotheses before deciding how to respond. The result? Significantly improved performance on tasks that trip up most LLMs - like multistep math, logic-heavy code generation, and open-ended multimodal reasoning.
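Google hasn’t published how Deep Think works internally, so the sketch below swaps in a well-known related idea, self-consistency voting: sample several independent reasoning attempts and keep the answer most of them agree on. It is only an illustration of "explore multiple hypotheses before committing", not Deep Think’s actual method. `parallel_think` and the noisy toy solver `noisy_multiply` are hypothetical names.

```python
import random
from collections import Counter
from typing import Callable

def parallel_think(solve_once: Callable[[random.Random], str],
                   n_paths: int = 8, seed: int = 0) -> str:
    """Hypothetical helper: run several independent attempts, return the majority answer."""
    rng = random.Random(seed)
    # Each reasoning path gets its own RNG so attempts are independent of each other
    answers = [solve_once(random.Random(rng.randrange(2**32))) for _ in range(n_paths)]
    # Self-consistency: the most frequent final answer across paths wins
    answer, _count = Counter(answers).most_common(1)[0]
    return answer

def noisy_multiply(rng: random.Random) -> str:
    """Hypothetical one-shot solver for 17 * 24: right ~70% of the time, else a small slip."""
    correct = 17 * 24
    if rng.random() < 0.7:
        return str(correct)
    return str(correct + rng.choice([-10, -1, 1, 10]))

print(parallel_think(noisy_multiply, n_paths=101))
```

A single call to `noisy_multiply` is wrong roughly three times in ten; voting over many paths makes a wrong final answer far less likely, because the errors scatter across different values while correct attempts pile up on one.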

Next up, enter Gemini Diffusion - a new experimental research model that could redefine how fast language models generate text.

Traditionally, text generation has relied on autoregressive transformers, which write one token at a time, left to right: every token waits on the one before it, and an early misstep can’t be revisited. Google flipped the script by adapting diffusion models, best known from image generation, to language. Instead of predicting the next word, the model starts from noise and refines the whole output over a handful of passes, like iterative reasoning baked into the architecture.

Early tests show it’s 10–20x faster than traditional methods while maintaining coherence. If this scales, it could be a key structural evolution.
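To make the speed argument concrete, here is a toy sketch under loudly stated assumptions: it uses a simplified "masked diffusion" style decoder (start fully masked, fill in the most confident positions a few at a time) and an oracle "model" that already knows the target sentence. It is not Gemini Diffusion’s architecture; `toy_next_token`, `toy_denoiser`, and the confidence scores are hypothetical. The only point is counting sequential passes.

```python
from typing import Callable, List, Optional, Tuple

TARGET = "the quick brown fox jumps over the lazy dog".split()

def toy_next_token(prefix: List[str]) -> str:
    # Hypothetical autoregressive model: returns the next word given everything so far
    return TARGET[len(prefix)]

def toy_denoiser(seq: List[Optional[str]]) -> List[Tuple[str, float]]:
    # Hypothetical denoiser: proposes a (word, confidence) pair for EVERY position at once
    return [(w, 1.0 / len(w)) for w in TARGET]

def autoregressive_decode(next_token: Callable[[List[str]], str], length: int) -> Tuple[List[str], int]:
    out: List[str] = []
    steps = 0
    for _ in range(length):
        out.append(next_token(out))  # one sequential pass per token
        steps += 1
    return out, steps

def diffusion_decode(denoise: Callable[[List[Optional[str]]], List[Tuple[str, float]]],
                     length: int, tokens_per_step: int) -> Tuple[List[Optional[str]], int]:
    seq: List[Optional[str]] = [None] * length  # start from "noise": every position masked
    steps = 0
    while any(t is None for t in seq):
        proposals = denoise(seq)  # one pass predicts all positions in parallel
        masked = [i for i, t in enumerate(seq) if t is None]
        # Commit only the most confident proposals; the rest are refined on later passes
        masked.sort(key=lambda i: proposals[i][1], reverse=True)
        for i in masked[:tokens_per_step]:
            seq[i] = proposals[i][0]
        steps += 1
    return seq, steps

print(autoregressive_decode(toy_next_token, len(TARGET))[1])  # sequential passes: 9
print(diffusion_decode(toy_denoiser, len(TARGET), 3)[1])      # sequential passes: 3
```

Both decoders produce the same sentence, but the diffusion-style one needs a third as many sequential passes here. Real-world speedups depend on how expensive each pass is and on the hardware, so treat this as intuition, not a benchmark.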

3. AI Everywhere All At Once

Google's AI strategy isn’t about building one mega-agent to rule them all.

It’s about turning every product you already use into an intelligent surface.

Gemini isn’t a destination - it’s a layer. And it’s quietly colonizing the Google suite:

In Gmail, it drafts in your tone, summarizes threads, and even cleans your inbox.

In Docs, it helps you write with structure, not just style.

In Android, it uses your camera, mic, and screen to understand context and respond in real time.

In Chrome, it explains complex web pages, answers questions, and interacts with sites on your behalf.

In Meet, it brings real-time speech translation - not someday, but now.

And all of it is powered by the same Gemini brain, just projected through different surfaces. Google isn’t asking users to come to Gemini. It’s letting Gemini come to them.

This is ambient AI done right - not another assistant, but a distributed layer of intelligence that turns static software into living systems.

4. AI-Native Creative Stack

For years, generative creativity felt like a magic trick. One-shot images. Short, soundless clips. Cool, but chaotic. But yesterday, Google moved the industry beyond party tricks and unveiled production tools.

Veo 3 is Google’s most advanced generative video model to date - and it’s stunning. Veo doesn’t just create video. It understands cinematic grammar:

Camera movement (e.g. dolly shots, zooms, pans)

Scene coherence across shots

Stylistic control (e.g. 16mm film, drone flyovers, Wes Anderson vibes)

And here’s the jaw-dropper: natively generated, synchronized audio

That last point is a massive leap. Audio isn’t stitched on - it’s generated in tandem. Footsteps match motion. Wind rises as the camera pans across trees. Dialogue? Not yet perfect, but it’s coming.

Imagen 4 also leveled up - now with much better text rendering and typography, plus high-resolution image control that rivals the best in the market (and beats most of them on Elo scores).

Then there’s Flow - Google’s new AI-native filmmaking interface. It connects Gemini, Veo, Imagen, and generative music into a single tool. You storyboard in natural language. It assembles scenes. You tweak the pacing, change the vibe, and export. It's Final Cut Pro, run by prompts. Google didn’t just ship the tech - it shipped the studio.

5. Moonshot City

I/O wasn’t just about infusing AI into existing surfaces. It also hinted at new ones.

Let’s start with Google Beam (née Project Starline), a telepresence system that ditches flat video calls for full-dimensional presence. It’s not just Zoom in 3D. It’s the remote meeting reimagined - depth, eye contact, and spatial audio in real time. The hardware is still bulky, but the ambition is clear: make remote presence feel present.

Then there’s Project Aura, Google’s smart glasses prototype built with Xreal. Think real-time translation, environmental context, and a Gemini overlay that interprets what you see - all running on your face. Translate this menu. Navigate that subway. Who needs a phone when your lenses can think?

Even software creation is getting reimagined:

Stitch, built on Google’s Galileo AI acquisition, turns a prompt into UI designs and front-end code you can export to Figma - no designer, no dev.

Jules lets you describe a change in plain language and commit it directly to GitHub - no IDE, no local setup.

But the most intriguing project isn’t hardware. It’s Project Astra. Astra is DeepMind’s vision for a persistent, multimodal agent. It sees through your camera. It listens through your mic. It reasons in real time and remembers what you’ve shown it. It’s not a chatbot. It’s not a product. It’s the glue between surfaces - and the substrate for agentic computing.

Google I/O 2025 was extraordinary - technically, strategically, even culturally.

But it was also overwhelming.

The company didn’t just unveil a roadmap. It aired a decade of ambition in a single sitting - and in doing so, it blunted the impact of breakthroughs that should have dominated the cycle.

Groundbreaking drops like Gemini 2.5 Pro Deep Think and Veo 3 deserved their own news cycles. Instead, they got buried under Jules, Stitch, Flow, Beam, Live, Flash, Pro, Astra, Ultra, and maybe a partridge in a pear tree.

It’s classic Google: World-class research. Underwhelming narrative control.

Even the market response told the story. The stock dipped during the keynote. Only the next day, once analysts had time to parse the deluge, did it begin to rebound.

And yet - once the fog clears, the signal is loud: Google isn’t playing defense anymore. And it just showed how dangerous it can be when it plays offense.