GPT-5 and the Lost Art of Managing Expectations

A solid upgrade, sunk by its own sales pitch.

GPT-5 dropped last week. It’s a solid upgrade over GPT-4 - especially in agentic workflows and integrated tool use - but the story is as much about expectation vs. reality as it is about benchmarks.

Sam Altman sold it as a “significant step” toward AGI and “smarter than us in almost every way.” The consensus? Incremental, not transformative. OpenAI may have brought us the stars, but Sam had promised the moon - and people were not happy.

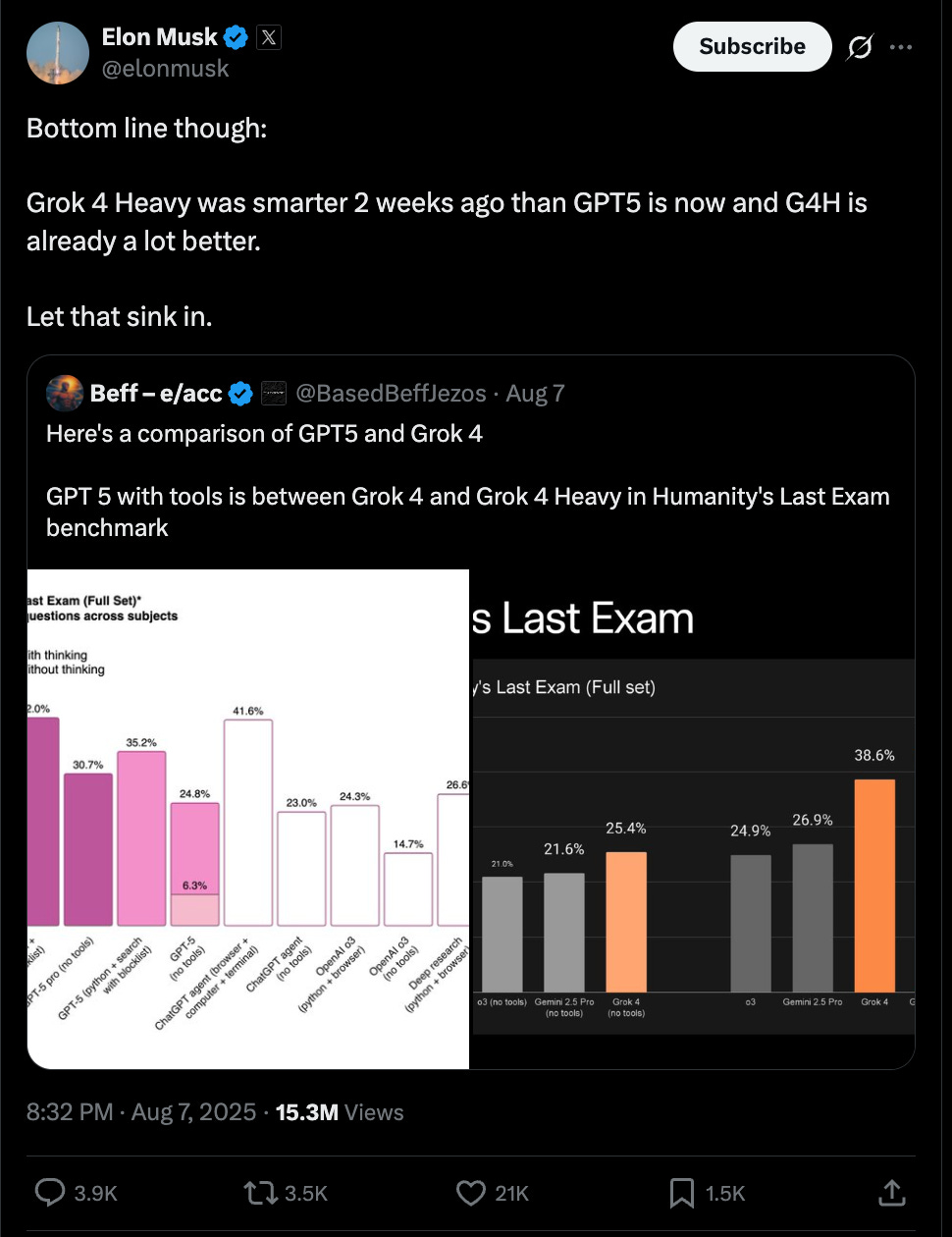

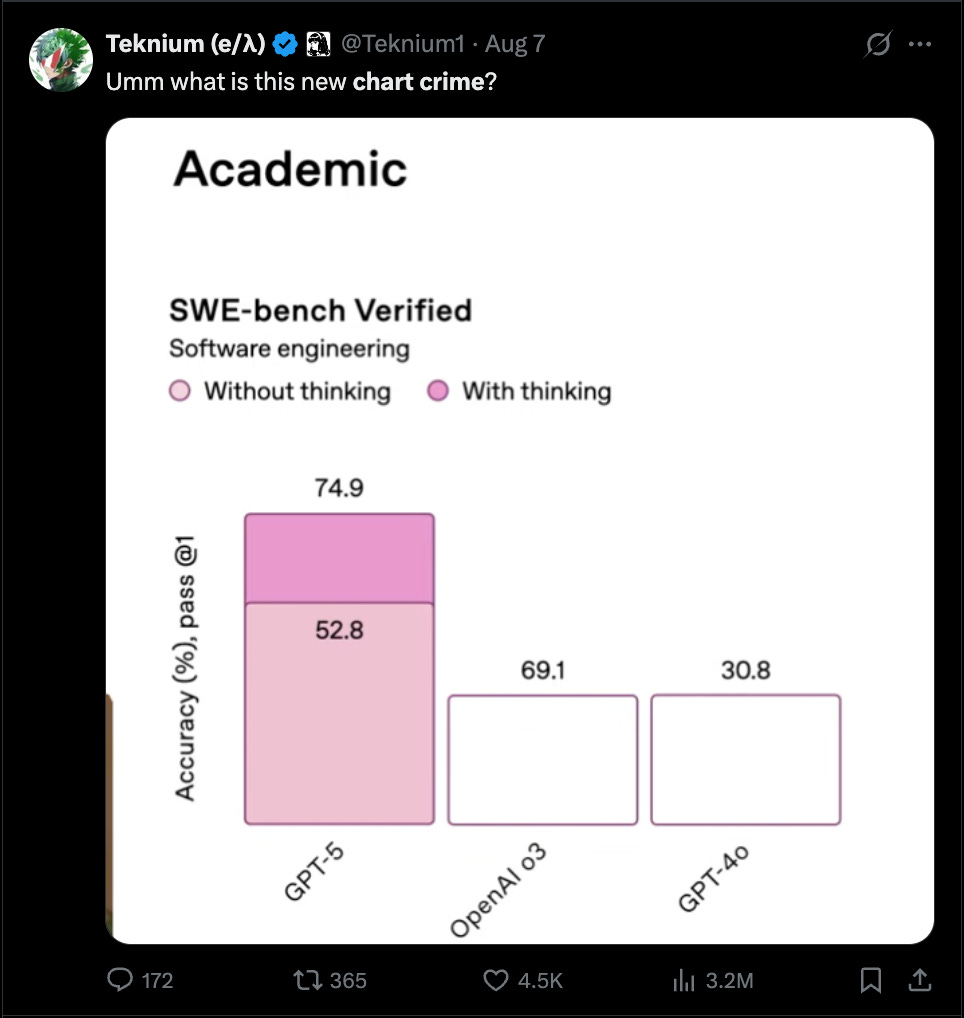

The live launch event did not help. There were awkward charts (Twitter called them “chart-crime”), Elon Musk live-tweeting how Grok 4 beats GPT-5 on some benchmarks, and a demo that managed to mangle the Bernoulli effect - which is one of those things you don’t want to get wrong if you’ve just claimed to have built a PhD-level expert in everything.

The over-promise has so dominated the conversation that it’s obscured what GPT-5 actually is. So let’s set aside the comms postmortem and look at the product:

1️⃣ Tool calling as a first-class capability

Tool use is no longer bolted on; it’s part of GPT-5’s reasoning loop. It can call multiple tools in parallel, merge results mid-flow, and maintain context over long-running tasks. Cursor, Vercel, and Notion all report that workflows which would have stalled on GPT-4 now run to completion.

Why it matters: Moves LLMs from reactive assistants toward autonomous systems, with latency and orchestration gains for production-grade agents.
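To make "call multiple tools in parallel, merge results mid-flow" concrete, here is a minimal sketch of the application side of that loop. In function-calling setups the model emits tool calls and the application executes them; this assumes the model has already asked for two calls in one step. The tools (`search_docs`, `fetch_ticket`), the call format, and the context message shape are all hypothetical and made up for illustration, not OpenAI's actual API schema.

```python
import asyncio
import json

# Hypothetical tools: stand-ins for real integrations such as a docs
# search or a ticketing API. Each sleeps briefly to mimic network I/O.
async def search_docs(query: str) -> dict:
    await asyncio.sleep(0.01)
    return {"result": f"3 docs matching '{query}'"}

async def fetch_ticket(ticket_id: int) -> dict:
    await asyncio.sleep(0.01)
    return {"result": f"ticket {ticket_id}: open"}

TOOLS = {"search_docs": search_docs, "fetch_ticket": fetch_ticket}

async def run_tool_calls(calls: list, context: list) -> list:
    """Run every tool call requested in one model turn concurrently,
    then append each result to the running context in request order."""
    coros = [TOOLS[call["name"]](**call["arguments"]) for call in calls]
    results = await asyncio.gather(*coros)  # results keep the order of `calls`
    for call, result in zip(calls, results):
        context.append({"role": "tool", "name": call["name"],
                        "content": json.dumps(result)})
    return context

# Two calls the model might emit in a single step (hypothetical shape).
calls = [
    {"name": "search_docs", "arguments": {"query": "refund policy"}},
    {"name": "fetch_ticket", "arguments": {"ticket_id": 42}},
]
context = asyncio.run(run_tool_calls(calls, []))
for msg in context:
    print(msg["name"], "->", msg["content"])
```

Because both tools wait on I/O at the same time, the step takes roughly as long as the slowest call rather than the sum of all calls, which is where the latency gains for agents come from. The merged context would then go back to the model for its next reasoning step.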

2️⃣ ModelPalooza ends, routing takes over

No more picking between GPT-4o, o3, and friends. ChatGPT now routes queries automatically: lighter prompts hit faster, cheaper models; harder ones trigger “thinking” modes that burn more compute.

Why it matters: Simplifies UX, optimizes cost/performance, and gives OpenAI more control over inference paths without forcing user-visible changes.

Caveat: After backlash, Sam Altman said GPT-4o will return for Plus users. Auto-routing stays the default, but “choose-your-own-model” survives as a premium nostalgia perk.
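The routing idea (light prompts to a fast model, hard ones to a "thinking" mode) can be sketched as a simple dispatcher. OpenAI has not published how its router decides, and a production router is more likely a trained classifier, so the keyword rules, the length threshold, and the model names `fast-model` and `reasoning-model` below are purely hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Route:
    model: str       # hypothetical model tier name
    reasoning: bool  # whether to spend extra "thinking" compute

# Illustrative signals that a prompt is hard; not OpenAI's actual criteria.
HARD_HINTS = ("prove", "step by step", "debug", "optimize", "derive")
LONG_PROMPT_WORDS = 200

def route(prompt: str, user_requested_thinking: bool = False) -> Route:
    """Send easy prompts to a cheap, fast tier and hard ones to a reasoning tier."""
    text = prompt.lower()
    looks_hard = any(hint in text for hint in HARD_HINTS)
    is_long = len(text.split()) > LONG_PROMPT_WORDS
    if user_requested_thinking or looks_hard or is_long:
        return Route(model="reasoning-model", reasoning=True)
    return Route(model="fast-model", reasoning=False)

print(route("What's the capital of France?"))
print(route("Prove that the square root of 2 is irrational."))
```

The `user_requested_thinking` flag stands in for a manual override, loosely mirroring the "choose-your-own-model" escape hatch: the default path is automatic, but a user can still force the expensive tier.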

3️⃣ Pricing turns aggressive

At $1.25 per million input tokens, GPT-5 matches Gemini 2.5 Pro and undercuts Claude Opus 4.1 by over 10×. Output pricing follows the same pattern: on par with Gemini, far below Opus. Smells like the start of a pricing war to me.

Why it matters: This is market-share offense - attractive to developers and enterprise buyers, and direct pressure on Anthropic’s coding stronghold.
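A quick back-of-the-envelope sketch shows what "over 10×" means for an input-heavy workload. The $1.25 figure for GPT-5 (and Gemini 2.5 Pro, which it "matches") comes from the post; the $15 per million for Claude Opus 4.1 is Anthropic's published list price at launch, worth re-checking before relying on it. The 2-billion-token monthly workload is a made-up example, and output tokens are ignored for simplicity.

```python
# Input-token prices in USD per million tokens.
# GPT-5 and Gemini 2.5 Pro figures are from the post; the Opus 4.1
# figure is an assumption based on Anthropic's list price at launch.
PRICE_PER_M_INPUT = {
    "gpt-5": 1.25,
    "gemini-2.5-pro": 1.25,
    "claude-opus-4.1": 15.00,
}

def input_cost(model: str, tokens: int) -> float:
    """Dollar cost of sending `tokens` input tokens to `model`."""
    return PRICE_PER_M_INPUT[model] * tokens / 1_000_000

# Hypothetical workload: an agent that reads 2B input tokens a month.
monthly_tokens = 2_000_000_000
for model in PRICE_PER_M_INPUT:
    print(f"{model:>16}: ${input_cost(model, monthly_tokens):,.2f}")

ratio = PRICE_PER_M_INPUT["claude-opus-4.1"] / PRICE_PER_M_INPUT["gpt-5"]
print(f"Opus/GPT-5 input price ratio: {ratio:.0f}x")
```

For this hypothetical month the script prints $2,500.00 for GPT-5 and Gemini versus $30,000.00 for Opus, a 12x gap on input, consistent with the "over 10×" claim.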

4️⃣ Tiered rollout and upsell logic

GPT-5 Mini is the new default for free ChatGPT users. Paid tiers unlock GPT-5 Pro and GPT-5 Thinking with higher limits and deeper reasoning. Education and Enterprise accounts get access next week.

Why it matters: Free access cements lock-in at consumer scale; tiers drive upgrades. Staggered enterprise rollout lets OpenAI monitor and tune before wide deployment.

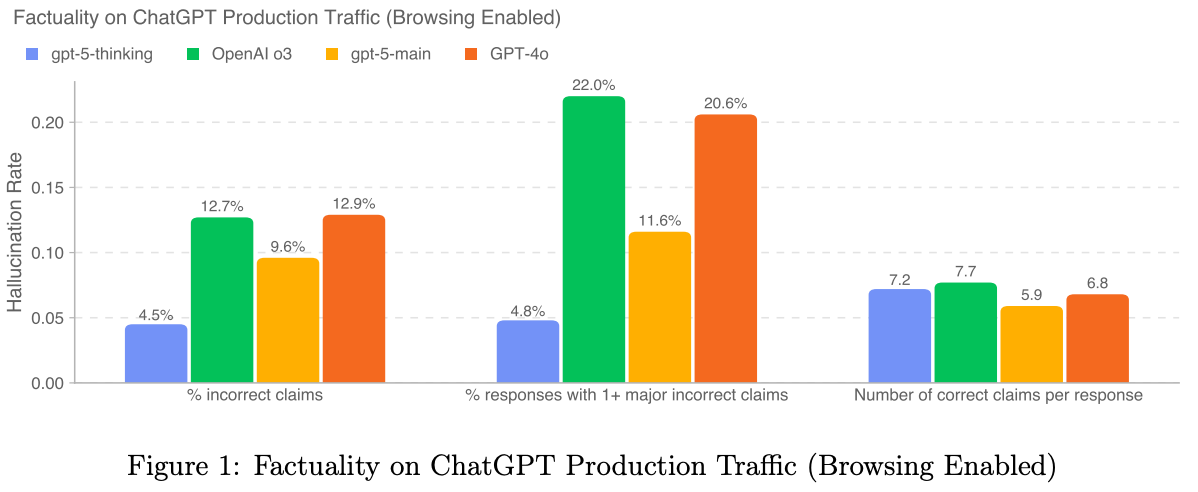

5️⃣ Hallucinations drop, but aren’t solved

GPT-5’s reported hallucination rate is ~9.6% with web access and ~4.5% in reasoning mode. New “safe completions” replace blanket refusals with partial, policy-compliant answers.

Why it matters: Accuracy remains the gating factor for unsupervised automation. Verification, whether OpenAI’s “universal verifier” or external layers, is still essential.

The Final Word

GPT-5 outperforms competitors on some benchmarks and falls slightly behind on others. Analysts call it evolutionary; vocal users call it a “downgrade” in tone and style. Prediction markets now give Google an edge for “best model by August.” On Reddit, the mood is that Google will “cook OpenAI” with Gemini 3. Elon says his next model is ready.

My take? The LLM arms race is converging. Whoever holds the “best model” crown by year-end will likely win by inches, not miles - and that edge will be fleeting. Calling GPT-5 incremental misses the slope of the curve: we haven’t been idle in the two years since GPT-4; we’ve been compounding capabilities across the entire field. The water’s heating up fast, fellow frogs, and if you’re waiting for a single big splash, you might not notice we’re already in a rolling boil.

Insightful analysis — but I’d challenge one point. Labeling GPT-5 as merely ‘evolutionary’ risks overlooking how native tool-calling, aggressive pricing, and improved factuality could be game-changers in production environments. The shift from reactive assistance to agentic orchestration isn’t flashy, but it fundamentally changes what developers can build on top. Would be curious to hear your thoughts on whether we might look back on GPT-5 as the real inflection point once the ecosystem catches up.

I noticed a reduction in hallucinations as I used it over the weekend - although I found myself using 5 in agent mode almost exclusively, so I came up with a theory that it was better (hallucinating less) when used in an agent-focused way. At which point I thought maybe I was just hallucinating that… ;)