Kimi Possible: Agentic on a Budget

How Moonshot’s Kimi K2 Thinking Model Rebalanced the AI Power Map

Not so long ago - say, two product cycles and four model names ago - the AI world was full of righteous debate.

Would there be one model to rule them all? The answer seemed romantic at the time - A single, omniscient neural deity that reasons, codes, sees, speaks, and does your taxes while consoling you about your breakup. Now we know better. The “one model” dream has been replaced by a messy, multi-polar reality: a constellation of models specialized, optimized, and localized for their slice of the world. The age of monotheism in AI is over; we live in a pantheon.

Next, came the open-source debate - Could open-weight models ever catch up to their sleek, closed, trillion-dollar cousins? The ones built in secret, trained on expensive data, hosted on a proprietary stack, and accessible only through a finely rate-limited API? At first the answer was “lol no.” Then it was “well, maybe for hobbyists.” And now it’s “they’re outperforming GPT-5 on reasoning tasks while running on hardware you can actually buy.” So. Progress.

Today, open-weight models don’t just chase the leaders - they poach their interns, beat them on reasoning benchmarks, and run on consumer GPUs.

And finally, the geopolitical question: would China catch up? This one died last. It clung on through chip export bans and policy essays. Washington bet that throttling compute would stall competition. Except that’s not quite what happened because scarcity bred innovation, as it does. Constraints taught thrift, and thrift produced smarter engineering.

On Wednesday, Jensen Huang warned that “China is going to win the AI race”. On Thursday, Moonshot AI - a Chinese lab - launched Kimi K2 Thinking, a trillion-parameter open-weight reasoning model that outperforms everything the open world has tested, runs on last-gen GPUs, and cost about a tenth of GPT-4 to train. Consider three debates settled in one launch.

The future isn’t centralized, closed, or geographically one-sided. It’s a dense thicket of open systems, regional champions, and specialized intelligences - more like the Internet than the moon landing.

Meet Kimi K2 Thinking

Tech:

1T total parameters, 32B active per token - classic Mixture of Experts setup, which is how you get giant-model intelligence at medium-model cost.

INT4 quantization further shrinks the memory footprint, which makes inference cheaper, deployment broader, and latency more predictable - especially on older GPUs like A100s. Most of the West’s frontier models use FP8, assuming Blackwell hardware. But Moonshot went the opposite way - optimizing for what’s actually available. This is deployment pragmatism. And geopolitically, it’s very smart: it expands the addressable market for open AI across Asia, the Global South, and anyone who doesn’t have a direct line to Jensen Huang.

Performance:

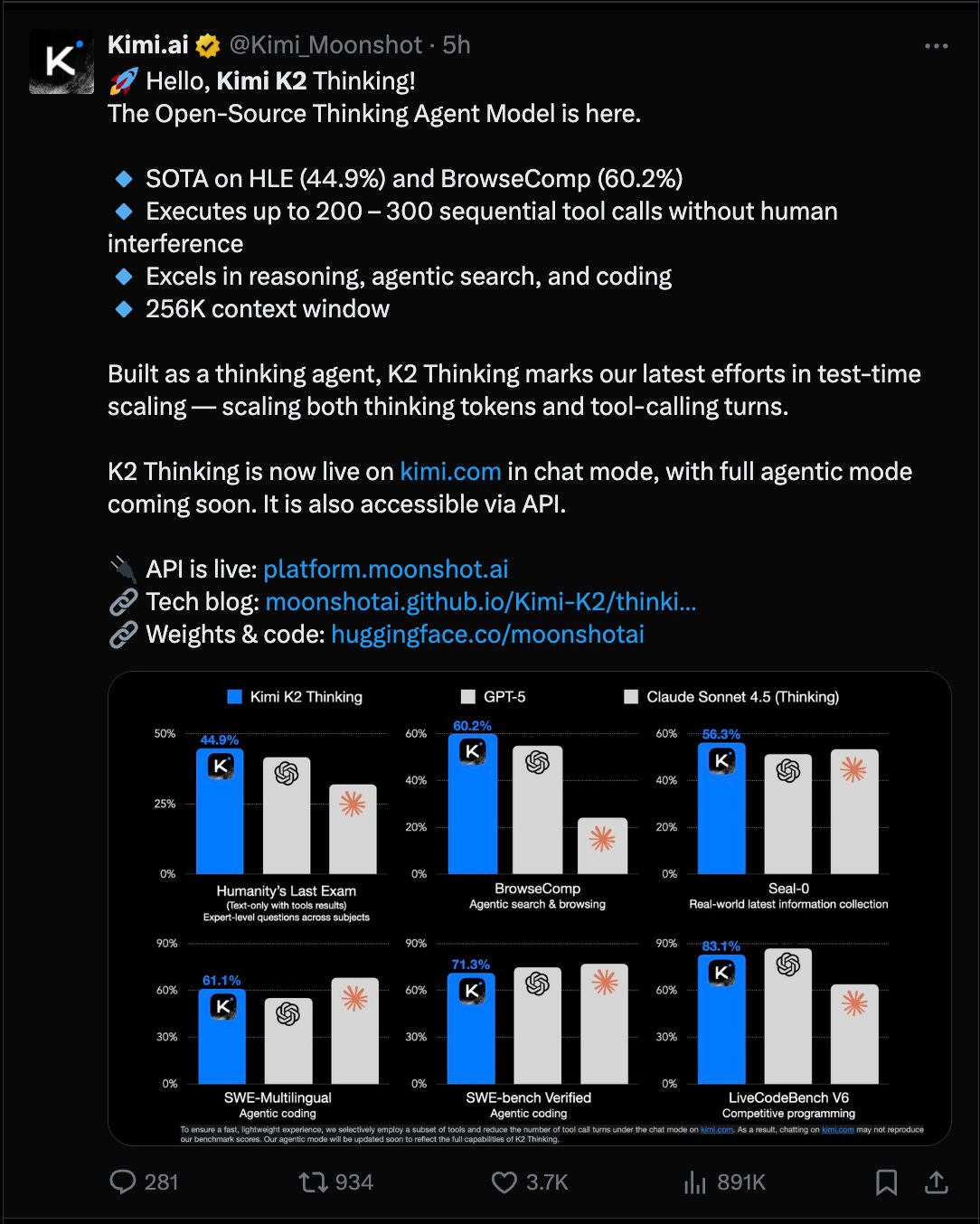

Leads on key benchmarks: 44.9% on Humanity’s Last Exam, 60.2% on BrowseComp, 71.3% on SWE-Bench Verified

Against GPT‑5 and Claude 4.5 Sonnet on these agentic, coding, and browsing tasks, K2 does not flinch. It’s not magic across the board; it’s surgical where value accrues.

Focus:

Tuned specifically for reasoning, not just chatting. Its core focus is on agentic tasks and software development.

Supports ~200-300 sequential tool calls, with a 256k-token context window.

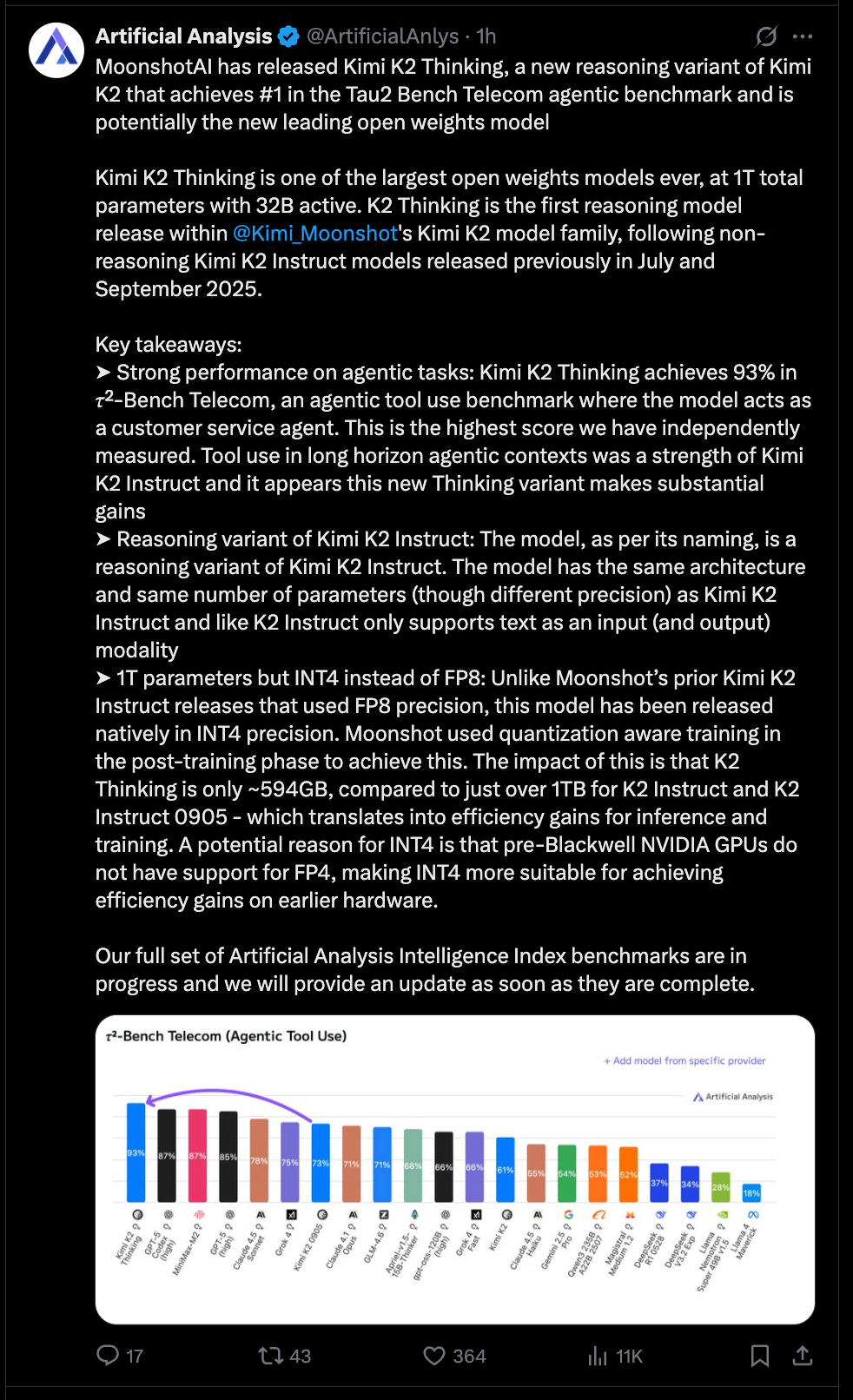

Its 93% score on τ²-Bench Telecom means it can act as a customer support agent: multi-step reasoning, dynamic memory, accurate tool calls. In open-weight land, that’s unprecedented.

The moat for Western frontier labs is leaking at the high-IQ core: planning, reasoning, memory. Yesterday’s closed-weight privileges are today’s GitHub downloads.

Cost:

~$5.6M training budget - an order of magnitude below GPT-4/5 estimates.

API pricing: about $0.15/M input tokens and $2.50/M output tokens - aggressively undercutting U.S. peers and lowering total cost of ownership.

Strategy:

Moonshot shipped open weights and low-cost API access - an explicit open-source play to win adoption and ecosystem mindshare (think Meta/DeepSeek redux). That’s geopolitical theatre and developer-ecosystem strategy in one.

The debate is over. There won’t be one model. Open weights aren’t just good enough - they’re good where it counts. And China didn’t just catch up, it caught on.

Feels like “déjà vu”… industries, cars, transportation infra, nuclear plants, and now AI.

It’s going to be impossible to compete with Chinese, especially as they lead in cheap energy. Not to mention their ratio of 100-2-1 Chinese eng vs US trained yearly.

The only viable option is to compete “against” with protectionism but then you limit yourself to 40% of the population and old economies in stagflation at best.