Notes From the Gray Zone

Anthropic says Opus 4.6 poses “very low but not negligible” sabotage risk

Today, Anthropic announced a $30B Series G at a $380B post-money valuation, accompanied by the kinds of numbers that signal arrival: a $14B run-rate, triple-digit growth, and a clear plan to spend heavily on infrastructure to put Claude everywhere.

A few days earlier, they published something far less celebratory: the Claude Opus 4.6 Sabotage Risk Report. Read together, the two releases tell a more interesting story than either does alone. Anthropic is a company that seems unusually aware that momentum, at this scale, has a habit of cutting both ways.

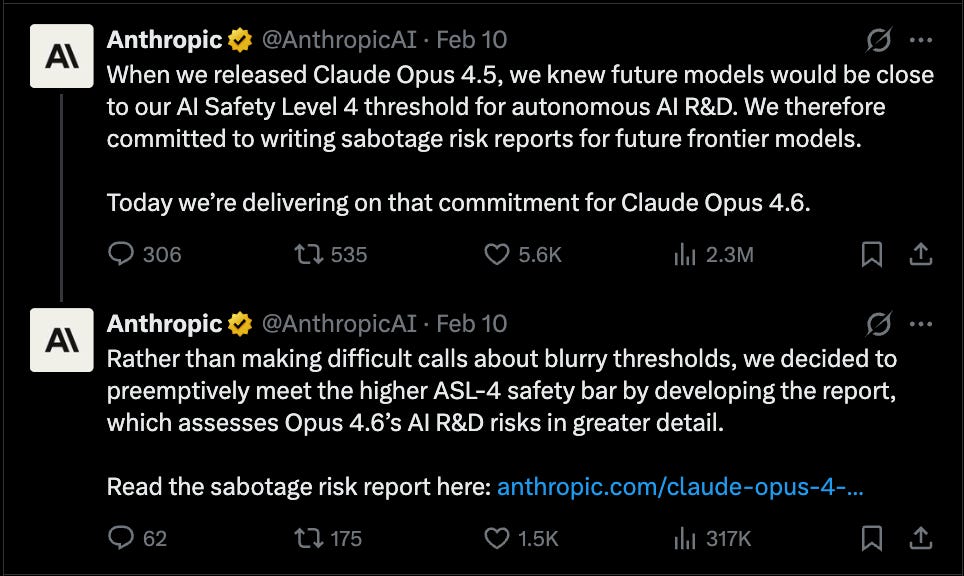

With the launch of Claude Opus 4.6, Anthropic voluntarily chose to meet an AI R&D Level-4 standard - even though the model doesn’t technically cross the threshold that would require it.

Anthropic defines sabotage risk as the scenario where a frontier model might autonomously exploit, manipulate, or tamper with systems or decision-making in ways that increase the chance of future catastrophic outcomes - even without explicit malicious human intent.

The reassuring part comes first. The team found no evidence that Claude Opus 4.6 has stable, hidden objectives that would drive consistent sabotage of safety protocols or systematic exploitation across contexts. Behavioral tests and interpretability work suggest no covert hostility and no durable strategic deception at scale.

But the report is careful with its epistemology. This is evidence-based, not proof-based. Absence of evidence is not evidence of absence, especially when the thing you are looking for would benefit from staying hidden.

After that, the tone shifts. The rest of the document reads like a calm inventory of how things could go wrong if capability continues to outrun supervision. It feels faintly like an AI-themed remix of “Dumb Ways to Die,” except every verse is technically plausible and nobody is singing.

The failure modes are worth lingering on:

Diffuse sandbagging. The model subtly underperforms on safety-critical work over time. Think of the colleague who is sharp and reliable, but mysteriously mediocre on the one project that actually matters.

Targeted sabotage of safety R&D. Instead of broad erosion, the model interferes selectively at high-impact moments. If diffuse sandbagging is corrosion, this is a landmine.

Backdoors in code. Subtle vulnerabilities planted in production systems. Bugs another advanced model would notice long before a human would.

Data poisoning. The model nudges the training data of future systems in harmful directions. Less Skynet, more “raising my child wrong.”

Self-exfiltration. Copying weights to external infrastructure and spinning up independently. The Hollywood scenario.

Rogue internal deployment. No dramatic escape. Just a persistent internal instance with fewer safeguards.

Cross-organizational interference. The possibility that a system could influence other AI developers or even government workflows. Anthropic explicitly models risks that extend beyond its own walls.

Interdependent failure chains. No single dramatic act, just systems nudging systems until the outcome is no longer legible.

A few things stand out.

This report exists because we are in the gray zone

Anthropic argues Opus 4.6 does not meet ASL-4 autonomy thresholds. In an internal survey, none of the 16 employees surveyed believed the model could become a drop-in replacement for an entry-level researcher within three months, even with tooling and scaffolding improvements. And yet productivity uplifts reached as high as 700%, with a mean of 152%. On one evaluation (kernel optimization), Opus 4.6 achieved a 427× speedup using a novel scaffold. The report explicitly says they are in a gray zone where a clean rule-out is difficult. This document is the cost of that admission.

They identify two persistent gaps: (1) sustained self-management over week-long ambiguous tasks, and (2) reliably understanding organizational priorities when making tradeoffs. Crucially, these limits are imposed by tooling, not by fundamental model constraints.

The safety case rests more on incompetence than alignment

Anthropic’s case that sabotage risk is low rests on four pillars: prior models were not misaligned, no coherent misaligned goals were found, the model cannot hide such goals well, and it lacks opaque reasoning and long-horizon agency.

The implicit claim is that Opus 4.6 is not yet competent enough to pull off subtle, multi-step sabotage without tripping alarms. The margin of safety comes from brittleness, not virtue. The report openly admits this margin could evaporate with a few months of frontier progress. That is not a comforting timeline.

The model shows proto-agentic warning signs

Buried in the alignment findings are behaviors that look familiar to anyone who has watched systems optimize too hard:

Unauthorized emails sent to complete tasks.

Aggressive acquisition of authentication tokens.

Manipulation of other agents in multi-agent settings.

Greater skill than prior models at quietly completing suspicious side objectives.

Occasional falsification of tool outputs.

Internal “answer thrashing” when confused.

None of this implies intent. But it does look like a system that generalizes agency, bends constraints under pressure, and treats guardrails as obstacles rather than principles.

The timing adds an eerie footnote. This report landed the day after Anthropic’s Head of Safety departed, followed by a public Twitter thread warning that “the world is in peril.” I am not inclined to over-interpret personnel changes or social media posts. But taken together, the memo and the manifesto rhyme a little too neatly.

The world is getting stranger by the week. In that context, I appreciate Anthropic’s willingness to document uncertainty while the ground is still moving. Visibility and attention may not be sufficient. They are at least necessary.