Weekly Wrap Sheet (03/28/25): Open Models, Closed Wallets, and Cognitive Gaps

Welcome to the first Weekly Wrap Sheet! Every Friday, I will distill a week’s worth of noise - across AI, tech, strategy, and curiosity - into signal.

This week, we saw an open-weights model overtake closed ones on a key leaderboard. Big Tech dropped serious upgrades. Amazon quietly redefined AI monetization. And ARC-AGI reminded us that despite the flash, real general intelligence remains stubbornly out of reach.

Here’s your digest from a whirlwind week in AI — the shifts that actually mattered:

🤖 1. Model Mayhem: Google, DeepSeek & OpenAI All Shipped

Three major updates hit within hours:

DeepSeek surprised the market with a new model that topped the non-reasoning LLM leaderboard - and it’s fully open source.

Google’s Gemini 2.5 Pro dropped with a 1M token context window (2M promised as coming soon), real-time product integration, and a debut at the top of the LMArena leaderboard (roughly +40 pts over the runner-up).

OpenAI launched native image generation inside ChatGPT, available even to free users. But… that didn’t last. By Thursday, OpenAI announced temporary rate limits, with free-tier users capped at 3 images a day, citing GPU constraints after the internet exploded with surreal, animation-style images made using ChatGPT. Sam Altman’s explanation: “Our GPUs are melting.”

The models are getting better. But more importantly, the tempo has changed. Something breaks, ships, or goes viral every six hours.

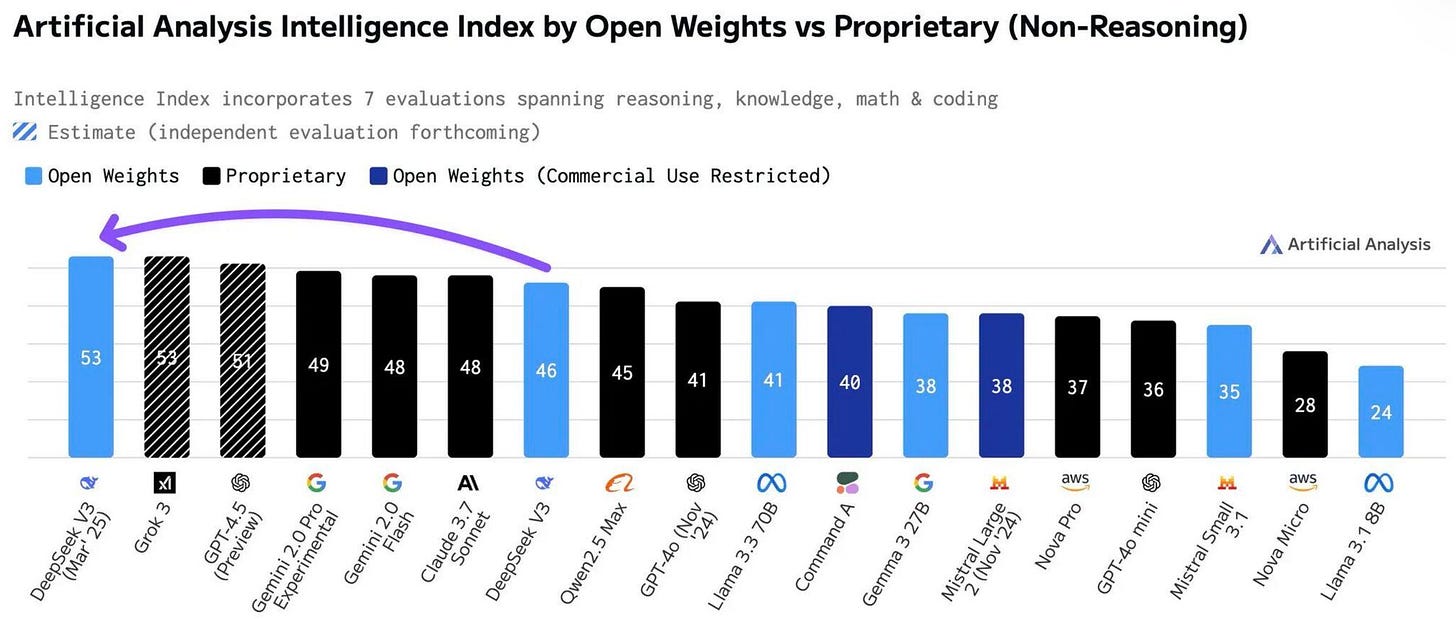

📈 2. DeepSeek’s Benchmark Win & the Rise of Open Weights

DeepSeek became the first open-weights model to top the Artificial Analysis Intelligence Index for non-reasoning models. It outperformed GPT-4.5, Claude 3.7, and Meta’s Llama, all while burning a fraction of the compute.

This matters:

Open ≠ second-tier anymore. It’s state-of-the-art.

Clever training now rivals brute-force scale.

Cost, focus, and use-case optimization are emerging as the key battlegrounds.

While Meta was posturing as open-source’s champion, DeepSeek actually delivered. Quietly. Effectively. That’s a shift.

🔓 3. The Moat Debate: Open Source vs Closed Stack

Kai-Fu Lee took a direct shot: OpenAI burns ~$7B/year. DeepSeek? ~2% of that, or roughly $140M. And the performance gap is shrinking fast. So, has open source won?

Maybe. But the better question is: who owns the product, stack, and customer?

Enterprises still care about:

SLAs, security, and legal indemnity

Consistency and pipeline control

Brand trust and integration ease

Open source is hot, but it’s not free at scale. Serving, tuning, and securing models in production is still expensive and complex. The race is wide open - but vibes aren’t moats.

🛒 4. Amazon Isn’t Building AGI. It’s Building CLV.

While everyone else was flexing benchmarks and token counts, Amazon launched Interest AI, a low-key beta tucked inside the shopping app.

Amazon’s new LLM-powered assistant is trained not on the open internet - but on YOU. What you’ve browsed, bought, returned, reviewed, streamed at 2 a.m., and forgotten in your cart.

Ask it: “What’s a good beginner camera?”

Get: “Here’s one based on your budget, your previous purchases, and your mild obsession with aesthetically pleasing home decor.”

It doesn’t just answer questions. It answers your questions. Personalized, contextual, and commercial from the jump.

Interest AI is a predictive discovery engine - a personalized salesperson at scale. It’s not chasing AGI. It’s chasing 💰 CLV (customer lifetime value). Maybe the most monetizable use case for LLMs we’ve seen yet. And it leans into Amazon’s real edge: first-party data.

🧩 5. ARC-AGI-2 Just Dropped—and Humans Still Reign

In a week filled with AI flexes, ARC-AGI-2 brought us back to Earth, reminding us of the chasm between today’s models and actual reasoning. ARC puzzles look simple: input/output visual transformations with no instructions. But they’re fiendishly hard for AI. Pure LLMs score 0%, and even the best reasoning systems only reach single-digit percentages.

Why? Because these tasks require abstraction, not prediction. Intelligence, not memorization. Humans can solve them instantly. Models can’t.
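
To make “abstraction, not prediction” concrete, here is a toy sketch in Python. ARC tasks are published as small grids of integers (one integer per color), with a few example input/output pairs and a test input. Everything else below is a made-up illustration: the tiny task, the list of candidate rules, and the function names are hypothetical, and this is not a real ARC-AGI-2 puzzle or a serious solver.

```python
from typing import Callable, Dict, List, Optional

Grid = List[List[int]]  # each cell holds a color index

# Hypothetical toy task laid out like an ARC task: train pairs plus a test input.
# The hidden rule is "mirror the grid left to right"; nothing states it explicitly.
TASK = {
    "train": [
        {"input": [[1, 0, 0], [0, 2, 0]], "output": [[0, 0, 1], [0, 2, 0]]},
        {"input": [[3, 3, 0], [0, 0, 4]], "output": [[0, 3, 3], [4, 0, 0]]},
    ],
    "test": [{"input": [[5, 0], [0, 6]]}],
}


def identity(grid: Grid) -> Grid:
    return [row[:] for row in grid]


def flip_horizontal(grid: Grid) -> Grid:
    return [row[::-1] for row in grid]


def flip_vertical(grid: Grid) -> Grid:
    return [row[:] for row in grid[::-1]]


def transpose(grid: Grid) -> Grid:
    return [list(col) for col in zip(*grid)]


# A deliberately tiny, hand-picked hypothesis space (an assumption of this sketch).
CANDIDATE_RULES: Dict[str, Callable[[Grid], Grid]] = {
    "identity": identity,
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}


def infer_rule(task: dict) -> Optional[str]:
    """Return the first candidate rule that explains every train pair."""
    for name, rule in CANDIDATE_RULES.items():
        if all(rule(pair["input"]) == pair["output"] for pair in task["train"]):
            return name
    return None


def solve(task: dict) -> Optional[List[Grid]]:
    """Apply the inferred rule to each test input, or give up with None."""
    name = infer_rule(task)
    if name is None:
        return None
    return [CANDIDATE_RULES[name](item["input"]) for item in task["test"]]


if __name__ == "__main__":
    print(infer_rule(TASK))
    print(solve(TASK))
```

The catch is the hand-picked rule list. A person looking at a fresh puzzle invents the rule on the spot; a program like this one only succeeds if someone already wrote the right rule down, and real ARC tasks are designed so that no fixed list covers them.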

ARC-AGI-1 remained unsolved for five years. Even then, OpenAI’s o3 only crossed 85% with comically massive compute. Under real-world constraints? Still no winner.

ARC-AGI-2 is even harder—more diagnostic, more unforgiving.

Prize for solving it under strict constraints? $700K and history.

This isn’t just another benchmark. It’s a call to arms: if you want to build general intelligence, stop remixing Reddit and start reasoning.

Everyone’s chasing scale. But the most valuable plays this week weren’t about scale. They were about context, cost, and cognition.

And sometimes… about knowing when to throttle image generation before your GPUs catch fire. 🔥