🧾Weekly Wrap Sheet (05/16/2025): Brainwaves, Buy Buttons & Boardroom Power Plays

This week: OpenAI ships, DeepMind thinks, Perplexity shops, Microsoft renegotiates, and Apple listens to your thoughts

🎬 TL;DR

Microsoft and OpenAI are renegotiating tech’s most lucrative situationship.

Perplexity turned search into a storefront, hinting at the future of AI-native commerce.

DeepMind’s AlphaEvolve is quietly inventing new algorithms - and rewriting math history. It’s not AGI, but it might optimize the infrastructure that trains its successors.

Apple is going straight for your neural cortex, teasing a post-touchscreen world.

OpenAI’s Codex Agents made coding weird again. Not suggestions, but shippable diffs. Devs won’t disappear - but boilerplate will. Welcome to the era of keystroke-free commits.

💸 Microsoft & OpenAI: It’s Complicated

No one does entanglement like Microsoft and OpenAI.

OpenAI’s restructure last week - designed to clean up its cap table, remove profit caps, and convert to a traditional equity model - sounds like standard pre-IPO housekeeping. Except there’s a catch: Microsoft has to sign off. And they’re… conflicted.

Starting in 2019, Microsoft locked in a golden deal: roughly $13B invested over several years in exchange for 20% of OpenAI revenue through 2030. Now OpenAI wants to cut that share to 10% and convert Microsoft into a common equity holder.

Microsoft isn’t thrilled. They want something else: long-term access to IP, especially the fancy stuff - like $20K/month agents that do PhD-level work. They also want continued use of OpenAI tech, which has sparked concerns internally at OpenAI about how that IP is used, where it goes, and who profits from it.

Both sides know they can’t quit each other - OpenAI still relies on Azure. Microsoft still needs OpenAI’s models. But they’re starting to build escape hatches.

OpenAI is exploring deals with Oracle and SoftBank for compute

Microsoft is building in-house models and hiring OpenAI competitors

And yet, Microsoft still wins either way:

It hosts OpenAI (Azure)

It sells OpenAI (Copilot across Office, GitHub, Windows)

It competes with OpenAI (internal model work)

It underpins the AI ecosystem (every hot codegen startup runs on GitHub, and many fork VS Code)

And it gets paid - no matter which model wins.

Call it the most lucrative love-hate story in tech. As OpenAI grows up, Microsoft must decide whether to stay parent, partner, or become predator.

🛍️ Perplexity Collapses the Funnel

Perplexity’s latest experiment is deceptively simple: in-chat commerce.

Ask about a hiking backpack. Get a recommendation. Tap to buy. All in the same flow, powered by PayPal integration. No tabs. No links. No ad detour.

It sounds obvious, but it changes the game. For 20+ years, search monetized attention. AI doesn’t want your attention. It wants to close the loop.

This is the beginning of a new monetization model for search:

From CPCs to GMV cuts

From “10 blue links” to “just buy this”

From attention arbitrage to transaction capture
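To make the shift from CPCs to GMV cuts concrete, here is a back-of-the-envelope sketch. The funnel variables (click-through rate, cost per click, purchase rate, order value, take rate) and every number in the example are hypothetical, chosen only for illustration; they are not Perplexity’s, PayPal’s, or anyone’s real figures.

```python
def cpc_revenue_per_query(click_through_rate, cost_per_click):
    """Attention model: the engine is paid when a user clicks an ad and leaves."""
    return click_through_rate * cost_per_click


def gmv_revenue_per_query(purchase_rate, avg_order_value, take_rate):
    """Transaction model: the engine takes a cut of gross merchandise value (GMV)."""
    return purchase_rate * avg_order_value * take_rate


if __name__ == "__main__":
    # Hypothetical inputs for illustration only; not real industry figures.
    cpc = cpc_revenue_per_query(click_through_rate=0.05, cost_per_click=1.00)
    gmv = gmv_revenue_per_query(purchase_rate=0.02, avg_order_value=120.0, take_rate=0.05)
    print(f"CPC model: ${cpc:.2f} per query; GMV model: ${gmv:.2f} per query")
```

The design point: CPC revenue grows with how often users click away, while GMV revenue grows with how often they buy in place. An engine that answers well enough to close the sale gets paid for the answer itself.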

And it raises a trillion-dollar question: What if search were the point of sale?

Perplexity isn’t alone - Amazon’s building Rufus, OpenAI is experimenting with shopping, Meta’s pushing commerce in WhatsApp - but most of these shopping experiences break the interaction flow by handing you links and making you switch apps to complete the purchase. Perplexity’s version is frictionless and likely to become the industry standard soon.

🔁 AlphaEvolve: It’s Turtles All The Way Down

DeepMind dropped something seismic with almost zero fanfare - AlphaEvolve, a Gemini-powered agent that discovers new algorithms from first principles. Not assistive, but inventive.

It’s not a lab toy. AlphaEvolve has been quietly running inside Google for a year. The results:

A scheduling heuristic now embedded in Borg, Google’s cluster manager, recovers on average 0.7% of Google’s worldwide compute resources - an absurd gain at hyperscaler scale.

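It redesigned part of a TPU chip, proposing a Verilog rewrite that stripped unnecessary bits from a highly optimized matrix-multiplication circuit - an optimization human engineers had missed.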

It found a 23% speedup in a key Gemini kernel, cutting total training time by 1%.

And it just broke a 56-year-old matrix multiplication record: it found a way to multiply 4×4 complex-valued matrices with 48 scalar multiplications, beating Strassen’s 1969 algorithm - a milestone in theoretical CS.

This Reddit user perfectly captures the enormity of the last breakthrough.
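For context on why 48 matters: Strassen’s trick multiplies 2×2 blocks with 7 multiplications instead of 8, and applying it recursively to 4×4 matrices costs 7 × 7 = 49 scalar multiplications (the schoolbook method needs 64). The plain-Python sketch below is written for illustration and is not taken from DeepMind’s work. It counts Strassen’s multiplications and checks the result against the schoolbook method; AlphaEvolve’s reported 48-multiplication scheme for complex-valued 4×4 matrices is not reproduced here.

```python
import random


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sub(a, b):
    return [[x - y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def split(m):
    """Split a 2n x 2n matrix into its four n x n quadrants."""
    n = len(m) // 2
    return ([r[:n] for r in m[:n]], [r[n:] for r in m[:n]],
            [r[:n] for r in m[n:]], [r[n:] for r in m[n:]])


def join(c11, c12, c21, c22):
    """Reassemble four quadrants into one matrix."""
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


def strassen(a, b):
    """Multiply n x n matrices (n a power of two) using Strassen's 1969 scheme.

    Returns (product, number of scalar multiplications performed).
    Works unchanged for integer, float, or complex entries.
    """
    if len(a) == 1:
        return [[a[0][0] * b[0][0]]], 1
    a11, a12, a21, a22 = split(a)
    b11, b12, b21, b22 = split(b)
    # Seven block products instead of the schoolbook eight.
    results = [strassen(x, y) for x, y in [
        (add(a11, a22), add(b11, b22)),
        (add(a21, a22), b11),
        (a11, sub(b12, b22)),
        (a22, sub(b21, b11)),
        (add(a11, a12), b22),
        (sub(a21, a11), add(b11, b12)),
        (sub(a12, a22), add(b21, b22)),
    ]]
    m1, m2, m3, m4, m5, m6, m7 = (p for p, _ in results)
    mults = sum(k for _, k in results)
    c11 = add(sub(add(m1, m4), m5), m7)  # M1 + M4 - M5 + M7
    c12 = add(m3, m5)                    # M3 + M5
    c21 = add(m2, m4)                    # M2 + M4
    c22 = add(sub(add(m1, m3), m2), m6)  # M1 - M2 + M3 + M6
    return join(c11, c12, c21, c22), mults


def schoolbook(a, b):
    """Reference O(n^3) multiplication used to check Strassen's output."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


if __name__ == "__main__":
    a = [[random.randint(-9, 9) for _ in range(4)] for _ in range(4)]
    b = [[random.randint(-9, 9) for _ in range(4)] for _ in range(4)]
    product, mults = strassen(a, b)
    assert product == schoolbook(a, b)
    print(mults)  # 49 = 7 * 7, versus 64 for the schoolbook method
```

Saving a single multiplication sounds tiny, but because these schemes are applied recursively, each multiplication saved at the base case compounds at every level of the recursion.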

This isn’t AGI. But it’s AGI-adjacent. Because AlphaEvolve does something few systems can: it improves the infrastructure that will train its successors.

It’s not the singularity (yet). It’s more of an infrastructure loop. ➿ The compiler writes a better compiler. The AI optimizes the hardware that trains the AI. It's turtles all the way down, but the turtles have GPUs now. 🐢

Anyway, OpenAI will announce a video-to-PowerPoint generator tomorrow and everyone will lose their minds again. They’re masters of narrative and shape the cultural conversation like no one else. But don’t sleep on DeepMind - they're not good at making headlines, but they know how to make history. Keep an eye on the quiet ones.

🧠 Apple Taps Into Your Brain

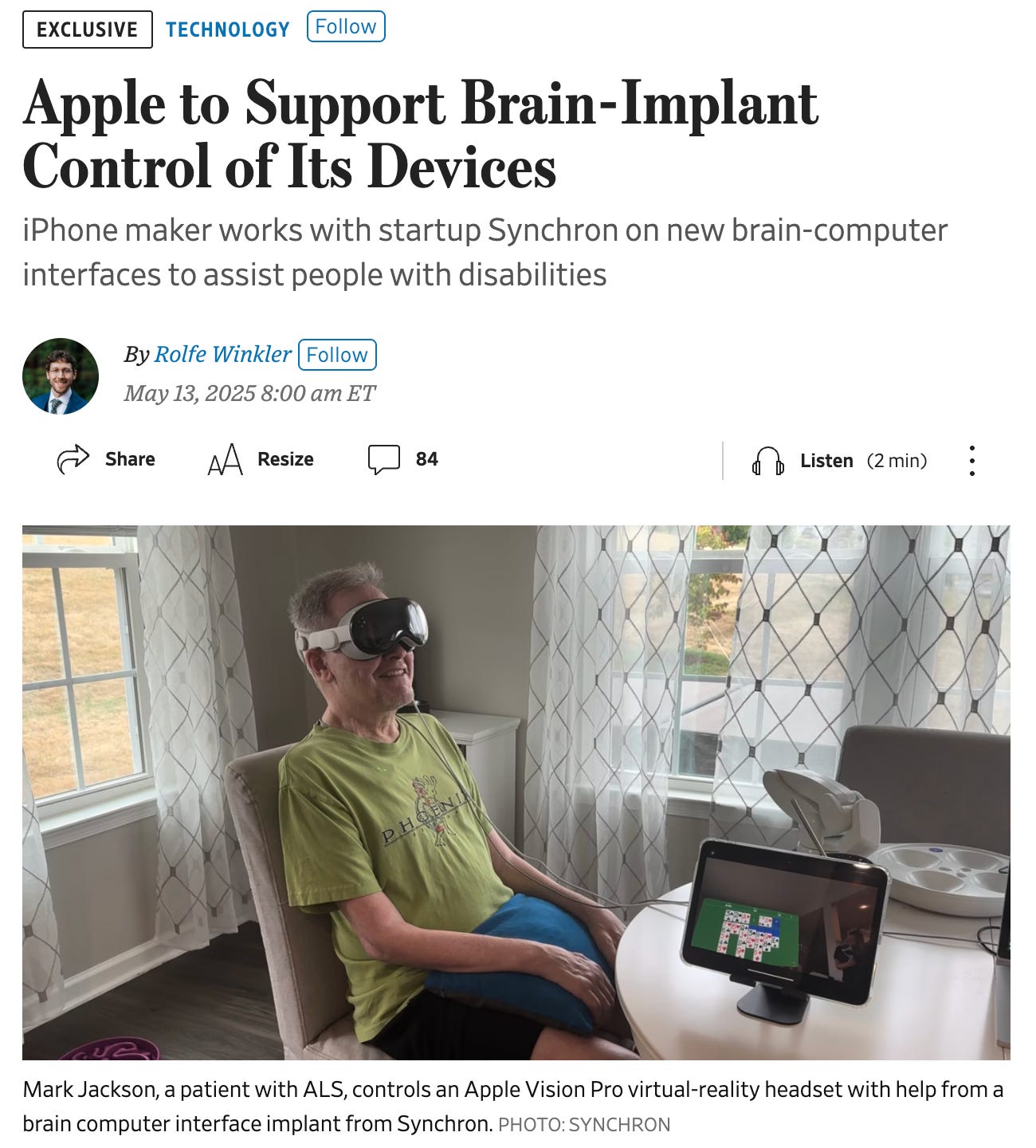

Apple just entered the chat on brain-computer interfaces. In partnership with Synchron, Apple is building native support for neural input. That means, someday, you could control your iPhone, iPad, or Vision Pro with your thoughts. No touch, no voice, no gesture - just intention. Black Mirror who?

The first use case is accessibility. A man with ALS in Pittsburgh used his implant to take in the view from a mountaintop in the Swiss Alps - virtually, on his Vision Pro. That’s extraordinary. That’s the best of what tech can be.

Synchron's device - basically a stent placed in a blood vessel on the surface of the brain’s motor cortex - lets users navigate Apple devices with thought alone. And while Neuralink gets the headlines (and puts electrodes inside your brain), Synchron is positioning itself to ship, with FDA approval likely before 2030.

Once cognitive input is native to OS-level design, it won't stop at accessibility. It could be the on-ramp to a post-touchscreen world. 📵 Or, depending on how you feel about brain implants, a slippery slope to techno-telepathy. Apple’s greatest UI breakthrough might not be a better screen - it might be no screen at all. If intent becomes input, what even is UX?

In the next decade, swiping could feel as dated as dialing. Apple could be building the last interface we'll ever need, moving its classic 'walled garden' inside our heads.

🛠️ Software Engineering Mutates

OpenAI’s Codex Agents are here. Not a chatbot, not autocomplete - but autonomous software agents that actually do the work. Given a scoped task, Codex spins up a container, reads your repo, executes the job, validates results, and returns a diff. Not a suggestion. A deliverable.
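The "execute, validate, return a diff" loop can be illustrated with a toy model. This is a hypothetical sketch, not Codex’s API or internals: it assumes one agent step can be reduced to "apply an edit, run a check, emit a unified diff", and it skips the container, repo reading, and model call entirely. The names `propose_change`, `add_pagination`, `passes_smoke_test`, and `list_rows` are made up for illustration; only `difflib.unified_diff` is a real standard-library call.

```python
import difflib


def propose_change(path, original, transform, validate):
    """Hypothetical agent step: edit a file, check the edit, return a unified diff.

    Returns None when validation fails, so only changes that pass the
    check are handed back as a deliverable.
    """
    updated = transform(original)
    if not validate(updated):
        return None
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        updated.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff)


# Hypothetical task: "Add pagination to this table."
SOURCE = "def list_rows(rows):\n    return rows\n"


def add_pagination(src):
    # Stand-in for the model's edit; hard-coded here for illustration.
    return src.replace(
        "def list_rows(rows):\n    return rows\n",
        "def list_rows(rows, page=0, size=20):\n"
        "    return rows[page * size:(page + 1) * size]\n",
    )


def passes_smoke_test(src):
    # Stand-in for "validates results": run the code and check one behavior.
    namespace = {}
    try:
        exec(src, namespace)
        return namespace["list_rows"](list(range(50)), page=1) == list(range(20, 40))
    except Exception:
        return False


if __name__ == "__main__":
    print(propose_change("table.py", SOURCE, add_pagination, passes_smoke_test))
```

The design choice worth noting is the gate: a failed check returns nothing rather than a half-working patch, which is what separates a deliverable from a suggestion.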

Two primitives define it:

▶️ Code: “Add pagination to this table.”

🔎 Ask: “How is this error handled across routes?”

Each job runs in a sandboxed runtime with logs and rollback support. It’s not just Copilot++ - it’s an API-native developer agent built for repeatability, reliability, and actual output.

Codex shifts the dev toolchain from assistive to autonomous. It turns software development into orchestration. And with OpenAI reportedly acquiring Windsurf (an AI-native IDE startup), the contours of the strategy sharpen: Codex handles execution. Windsurf handles integration. If Codex is the contractor, Windsurf is the construction site. Together, they’re going after the entire SDLC. For OpenAI, this is both a defensive move (avoid becoming a commoditized model vendor) and an offensive one (own the agent runtime, IDE, and dev surface).

The future isn’t fewer engineers - it’s fewer keystrokes. Codex doesn’t kill engineering. It just ends the illusion that writing code was ever the hard part.